8 min read

05/08/2025

The Language Technologies AI Lab... in the Mediterranean

The world has been captivated by the impressive capabilities of large language models since the release of ChatGPT 3.5 in December 2022. It was the first time humans experienced a true cognitive interaction with a machine. Until then, our interactions had been limited to calculators, video games, some clever algorithms, or slow-moving robots. In my early analysis in 2023, "How the New ChatGPT Will Send Ripples of Change Through the World as We Know It", I listed some jobs that I estimated would be affected. Many more analyses have followed as we have tested the real possibilities of the technology and its many limitations. The fear of job replacement was already present in the first wave of analysis, and many C-level staff saw LLMs or GenAI as a cost-cutting tool (meaning staff). Wrong.

Organizations went into shock, and the message from the C-level was equally clear yet ambiguous: "We have to do something with GenAI." Two years on, billions of dollars invested (a lot of it wasted), and after many failures and disappointments, markets and organizations are beginning to recognize the realistic uses and also the practical limitations of a technology that AI Labs like Pangeanic pointed to: GenAI is not a bad technology, but without a deep understanding, many expectations are not realistic.

Transformers are a very solid and successful technology. However, many decisions and "AI-first" initiatives have been made based on those initial “cognitive impressions”, drawn from personal experiences with an LLM-based chat system and assuming that the individual experience will scale. Snake oil sellers continue to advertise "human-parity reasoning capabilities." Still, in reality, all we're doing is utilizing massive amounts of computing, extensive data recall capabilities, and substantial summarization capabilities, with a significant percentage of possibilities for something to go wrong. Only in 2025 are we realizing the transformative potential of a different approach: Small Language Models (SLMs). At Pangeanic, we aim to be at the forefront of this shift. Our recent seal award as an "R&D intensive company" until 2028 by the Spanish Ministry of Science, Innovation and Universities bears witness to our frontier, yet realistic AI Lab R&D efforts.

What is an AI Lab and how does it work in the language technology field?

An AI Lab in the language technology field serves as a dedicated research hub where interdisciplinary teams explore the frontiers of natural language processing and artificial intelligence. Unlike conventional technology departments or typical AI consultancies that integrate existing models through APIs, a genuine AI Lab operates with a dual mandate: pursuing fundamental research while developing practical applications that solve real-world language challenges.

The operational framework of a language technology AI Lab involves three essential components. First, we maintain a strong research foundation where linguists, data scientists, and AI specialists collaborate to develop novel algorithms and models for natural language understanding. This interdisciplinary approach ensures our solutions address both the computational and linguistic complexities of human language. Second, we implement rigorous testing environments that validate theoretical concepts against real language data before deployment, ensuring reliability and performance in production settings. Third, we establish partnerships with high-computing centers, such as the Barcelona Supercomputing Center, and academic institutions, including Valencia's Polytechnic School of Pattern Recognition and Human Language Technology, from which several of our staff have received training, as well as other European universities. And, of course, industry stakeholders, to ensure our research remains both scientifically sound and commercially relevant.

What distinguishes leading AI Labs like Pangeanic is our ability to bridge the gap between theoretical research and practical implementation. While many organizations treat AI as merely a feature to add to existing products, we approach language technology as a complex ecosystem that requires specialized expertise across computational linguistics, machine learning, and domain-specific knowledge. This holistic perspective enables us to develop solutions that go beyond surface-level language processing to address deeper linguistic structures and cultural contexts.

We don't just create AI solutions—we serve as strategic partners who help organizations navigate the complex landscape of language AI with realistic expectations and purpose-built tools that address specific business challenges.

Research-driven innovation: Beyond the hype cycle

Our recent recognition as an R&D intensive company by the Spanish Ministry of Science, Innovation and Universities until 2028 reflects a fundamental commitment to advancing the field rather than simply capitalizing on current trends. This award seal isn't ceremonial. It represents a rigorous evaluation of our research contributions in the last few years, our innovation pipeline, and our commitment to pushing the boundaries of language technology.

|

In practical terms, this means our AI Lab operates with a longer time horizon than typical AI companies. While others chase quarterly results by implementing the latest OpenAI API, we're developing the technologies that will define the next phase of language AI, and that is why the Ministry's seal lasts until 2028. Our research spans multiple areas, including few-shot learning techniques for low-resource languages. These domain adaptation methodologies enable the rapid specialization of general models for specific industries, as well as efficiency optimizations that make advanced language AI accessible to organizations without massive cloud budgets. |

This research focus also informs our consulting and implementation work. When we engage with clients,we do not just deploy existing solutions; we often create new approaches tailored to their specific challenges. This may involve developing custom training datasets for AI, creating novel evaluation metrics for domain-specific performance, or architecting hybrid systems that combine multiple AI techniques to achieve optimal results. An example of this is specific, automated MTQE and LQE methods developed for particular clients and their verticals.

A legacy of innovation: From statistical MT to Deep Adaptive AI

Our journey began in 2005, when our CEO, Manuel Herranz, carried out a friendly buy-out from the Japanese corporation he had represented in Europe since 1999. We were among the first companies in the world to adopt and customize Moses, the pioneering open-source Statistical Machine Translation system, creating the first self-training MT engines. (There are presentations still available online where our CEO, Manuel Herranz, explained the concept of self-training machines back in 2011!!) This early adoption of cutting-edge technology established a pattern that continues today: we don’t follow trends... we help create them.

Our role as a Language Technologies AI Lab is perhaps best illustrated through our participation in groundbreaking European projects like

- NTEU (Neural Translation for the EU): We led a consortium with KantanMT and Tilde to create the largest neural machine translation engine farm ever developed—506 different near-human quality engines covering all official EU languages. This €2 million project, funded by the Connecting Europe Facility, eliminated the need for English as a pivot language, enabling direct translation between any EU language pair. The impact was transformative: Estonian could now be translated directly to Portuguese, or Maltese to Greek, with unprecedented accuracy and cultural nuance.

- iADAATPA to MT-Hub: Our consortium developed what became the automatic translation platform for EU Public Administrations, supporting the digital modernization efforts across member states. It served as a backup to the EU's translation during its early days for capacity building, translating more than 10 million words in a few months. This project demonstrated our ability to create secure, scalable solutions that handle sensitive governmental communications while maintaining the highest standards of data protection.

- NEC TM Data Platform: We developed the National European Central Translation Memory Data Platform, creating a bridge between public administrations and translation service providers to promote better translation memory data-sharing practices and optimize translation workflows across Europe.

- MAPA Project: As pioneers in multilingual data anonymization, we developed the world’s first multilingual anonymization software based on multilingual BERT, addressing critical privacy concerns in the age of GDPR and data protection regulations. The solution is still in use by the European Commission in Luxembourg on-premises for sensitive documents.

These projects were more than technical achievements. They were foundational building blocks for the multilingual digital infrastructure that Europe relies on today, as well as larger projects like Europeana Translate, AI4Culture, and the current Mosaic Media Project, where we introduce novel AI workflows at five European broadcasters. More importantly, these projects positioned Pangeanic beyond the language technology service provider status and closer to a research and development powerhouse capable of tackling the most complex challenges in language technology.

Barcelona Supercomputing Center and PRHLT partnerships: Advancing the science of language AI

Our collaboration with the Barcelona Supercomputing Center (BSC) and the Pattern Recognition and Human Language Technology School at Valencia's Polytechnical University represents the cutting edge of our research activities. Together, we’re advancing NLP and AI technologies with a focus on data annotation, Reinforcement Learning from Human Feedback (RLHF), bias detection, and R&D projects in language technologies. Our AI Lab helped BSC to create ethical datasets for the first series of Catalan LLMs in the world, partly financed by the Spanish government in their push to respect and preserve low-resourced languages within Spain. Until then, the Catalan language was considered an under-resourced language, close to "endangered" status, together with Basque and Galician - other co-official languages in Spain. Our push for low-resourced languages and language equality also led us to collaborate in several EU-sponsored initiatives like ELE, with significant contributions in the advancement of machine translation in official and non-official EU languages.

This partnership has been instrumental in developing our understanding of how to create ethical, robust Large Language Models while ensuring responsible AI development. From bias detection to translation evaluation and annotation, our PECAT tool helped the supercomputing center optimize training data. The datasets Pangeanic created and curated have contributed to training some of Europe’s most advanced language models, including BSC’s own Aina and Salamandra models—work that directly feeds into our forthcoming Small Language Model initiatives. The Pattern Recognition and Human Language Technology School at Valencia's Polytechnical University is a frequent partner in regional and national R&D programs and a source for talent acquisition.

Looking ahead: The next decade of language technology

As we look toward the next decade, several technological and market trends will shape the evolution of language technology, all of which align with Pangeanic's strategic direction as a specialized AI Lab. These trends tend to feature in market studies and analysis by McKinsey, Gartner, and CSA Research.

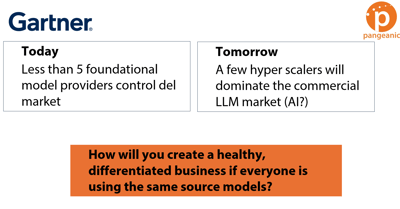

- Democratization of AI: The shift toward Task-Specific Small Language Models, as predicted by Gartner, represents a broader democratization of AI capabilities, making advanced language technology accessible to organizations of all sizes. After 4 mentions in Hype Cycles and Market Guides, Pangeanic is very well-positioned to lead and be ahead of the market, building next-generation solutions. Rather than requiring massive computational resources, purpose-built SLMs (Small Language Models) will deliver superior domain-specific performance on standard infrastructure... even home PCs and small servers. This will add a true sense of "ownership" of the technology, with a differentiating factor for companies, and also lead to massive energy savings. Companies and organizations that only build on or integrate external solutions will find it difficult to differentiate themselves from competitors. In contrast, those that build their solutions will have the knowledge and wherewithal to create value-added custom solutions for their clients and verticals.

- Regulatory Evolution: Increasing regulatory attention to AI will favor solutions that provide transparency, auditability, and local control—all strengths of specialized, on-premise systems (or private SaaS systems). Our European leadership position and experience with regulated industries position us ideally for this shift.

- Cultural Preservation: As globalization continues, there will be increasing recognition of the importance of preserving and promoting linguistic and cultural diversity through technology, from Africa to Asia and Latin America. True multilingual AI must understand cultural context, not just linguistic patterns.

- Edge Computing: The continued improvement in local computing capabilities will make sophisticated on-premise AI deployment increasingly practical and cost-effective, reducing dependence on cloud-based services and improving response times.

- Industry Specialization: The most valuable AI systems will be those that understand specific industry contexts, regulatory requirements, and business processes. Generic AI solutions will give way to specialized tools that integrate seamlessly with existing workflows.

Pangeanic's position as a Language Technologies AI Lab puts us at the center of all these trends, with the experience, partnerships, and technical capabilities needed to shape the future of business language technology. As our VP of Revenue, Amando Estela, notes, "The future of communication is multilingual and borderless. At Pangeanic, we're not just translating text; we're facilitating deep cultural connections across the globe with a fluency that was once thought to be beyond the reach of machine translation."

The AI revolution in language technology is just beginning, but it won't be led by the companies making the most noise about general intelligence. AI Labs will drive it like Pangeanic, combining cutting-edge research with practical expertise, proven performance at scale, and industry recognition from authorities like Gartner. The future of AI is not about replacing human intelligence—it's about augmenting human capabilities with reliable, specialized tools that make communication across languages and cultures more effective than ever before.

Why choose Pangeanic as your language technologies AI Lab partner

When organizations consider AI and language technology, they often focus on technical specifications—such as model parameters, training data size, or processing speed. But the real value lies in the expertise behind the technology and the proven track record of innovation. Pangeanic's multiple recognitions by Gartner underscore our leadership across the entire language AI ecosystem: featured in the Hype Cycle for Language Technologies (2023), recognized in both the Hype Cycle for Natural Language Technologies (2024) and as a Representative Vendor in the Market Guide for Data Masking and Synthetic Data (2024), and most recently acknowledged in Gartner's "Emerging Tech: Conversational AI Differentiation in the Era of Generative AI" report (2025). These acknowledgments span from our pioneering work in Neural Machine Translation to our innovative approaches in data privacy, synthetic data generation, and optimized language models for conversational AI.

Pangeanic is recognized and fully aligned with the trends that will drive technology solutions in the coming decade

This recognition from one of the industry's most respected research organizations underscores what sets Pangeanic apart in the competitive landscape of language AI. Gartner's latest report highlights explicitly Pangeanic's innovative approach to "improving the accuracy and reliability of GenAI-enabled offerings" through advanced model optimization techniques. Our ECO platform exemplifies the key differentiators Gartner identifies as crucial for market leadership: optimized language models, advanced Retrieval-Augmented Generation systems, and comprehensive multi-modal capabilities supporting over 200 languages.