03/11/2025

What Are Auto-regressive Models? A Deep Dive and Typical Use Cases

If you have used ChatGPT, Claude, DeepSeek (which was not trained for just $5M), Gemini, or any other modern, publicly available Generative AI tool to write an email, summarize a report, or translate a document, you have interacted with an auto-regressive model. In today's blog post, I'd like to explain this concept so that users understand what lies behind the technical jargon: the fundamental engine driving the most advanced artificial intelligence in history.

We will provide an authoritative deep dive into what autoregressive models are, how they work, how they differ from other models, and how they power the services you use every day.

As a leader in AI-driven language technologies, we at Pangeanic believe that understanding this core technology is essential for any business or decision-maker looking to harness the power of Generative AI without becoming part of the 80%-90% of "AI implementations that fail," according to McKinsey and Gartner. Forbes reported similar failure rates in 2024, driven by problems with the quality of the data used for AI training.

1. The core definition: What does "auto-regressive" mean?

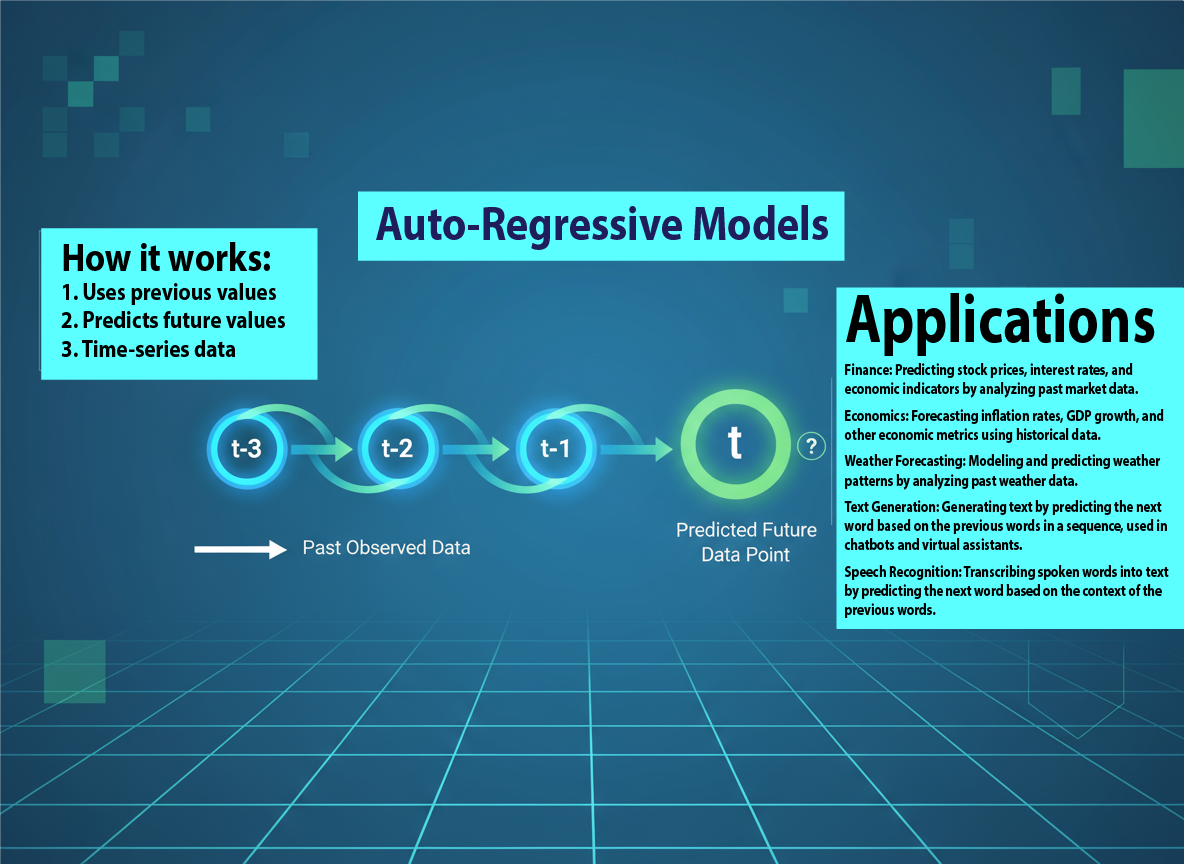

At its simplest, an autoregressive model is a type of AI that generates new data by predicting the next step in a sequence based on all previous steps.

Let's break down the word:

- Auto: From the Greek autós, meaning "self." In our case, this means that the model relies on its own previous outputs.

- Regressive: From "regression," a statistical method used for prediction. It is the same type of prediction you may first have encountered some years ago in tools like Gmail.

In essence, the model is performing a "regression on itself." It looks at the sequence it has already produced and asks, "Based on everything I've said so far, what is the most probable next token/word?"

Think of it as an expert storyteller writing a sentence:

- It starts with a prompt: "The quick brown fox..."

- It predicts the following word: "...jumps."

- It adds this word to the sequence: "The quick brown fox jumps..."

- It uses this new, longer sequence as input to predict the next word: "...over."

- It repeats this process, token by token, until it generates a complete, coherent thought.
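The storyteller loop above can be sketched in a few lines of Python. This is a toy under loud assumptions: `TOY_TABLE` and `next_word_probs` are hypothetical, hand-written stand-ins for a trained model, and, to keep the example short, they only look at the last word of the sequence, whereas a real model conditions on all previous tokens. The loop itself (predict, append, repeat, stop) is the auto-regressive part.

```python
# Hypothetical, hand-written probabilities standing in for a trained model.
# A real LLM scores tens of thousands of tokens and conditions on the whole
# sequence; this toy only looks at the most recent word.
TOY_TABLE = {
    "fox": {"jumps": 0.7, "runs": 0.2, "sleeps": 0.1},
    "jumps": {"over": 0.8, "high": 0.2},
    "over": {"the": 0.9, "a": 0.1},
    "the": {"lazy": 0.6, "fence": 0.4},
    "lazy": {"dog": 0.9, "cat": 0.1},
    "dog": {"<eos>": 1.0},
}


def next_word_probs(sequence: list[str]) -> dict[str, float]:
    """Return P(next word | sequence) from the toy table (last word only)."""
    return TOY_TABLE.get(sequence[-1].lower(), {"<eos>": 1.0})


def generate(prompt: list[str], max_new_words: int = 10) -> list[str]:
    sequence = list(prompt)
    for _ in range(max_new_words):
        probs = next_word_probs(sequence)
        # Greedy choice: take the single most probable next word.
        next_word = max(probs, key=probs.get)
        if next_word == "<eos>":  # special end-of-sequence marker
            break
        # Append the prediction; the longer sequence becomes the next input.
        sequence.append(next_word)
    return sequence


print(" ".join(generate(["The", "quick", "brown", "fox"])))
```

Run as-is, it extends the prompt word by word into "The quick brown fox jumps over the lazy dog" and stops when the table offers the end-of-sequence marker.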

That is practically all the magic of the basic concept: step-by-step, sequential generation is the defining characteristic of an auto-regressive model. Everything built on top of it (chatbot interfaces, new data inputs from web crawling, speech recognition and voice conversations, chat history, projects, document uploads used as references in an ad-hoc RAG system, etc.) is computer engineering.

And as with all computer engineering, it can be replicated.

2. How auto-regressive models work under the hood

While the concept is simple, the mechanism behind it is highly complex. It is powered by the Transformer architecture, specifically its "decoder" part. You can learn more about Transformers in our 2023 post "What Are Transformers in NLP: Benefits and Drawbacks".

This is a simplified breakdown of the process:

- Input & Probability: The model takes an initial sequence of tokens (words or fragments of words) as a prompt. It processes this sequence and outputs a score for every possible token in its vocabulary, which a softmax function converts into a probability distribution over what could come next.

- Sampling: The model "samples" from this probability distribution to choose the next token. It may pick the most probable token, or draw from among the top few candidates to introduce some variety or "creativity." This is commonly misunderstood, and it is sometimes the cause of "dubious answers": because the clearest "top choice" is not always selected, the output can contain, for example, wrong terminology in a translation, or information that is unexpected or not very relevant. Such errors are part of the hallucinations inherent to auto-regression.

- Iteration (The "Auto" Loop): This is the crucial part. The newly chosen token is appended to the end of the input sequence. The entire new sequence is then fed back into the model to predict the next token.

- Masked Self-Attention: This is the clever trick inside the Transformer decoder. Self-attention on its own lets every token look at every other token in the sequence, including those that come later. To keep the model auto-regressive, that is, looking only at the past, the decoder uses a mechanism called "masked self-attention." The mask blocks each position from "seeing" any tokens that come after it, so each prediction can depend only on the tokens before it.

- Stop Condition: This loop continues until the model predicts a special "end-of-sequence" token or reaches a pre-defined length limit.
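The steps above map almost one-to-one onto a decoding loop. The sketch below is illustrative only: `toy_logits` is a hypothetical stand-in for a real Transformer decoder (it is rigged to prefer one sentence), and top-k sampling with a temperature is just one common decoding strategy among several. Masked self-attention happens inside the model, so it does not appear in this loop.

```python
import math
import random

# Toy vocabulary; index 0 is the special end-of-sequence token.
VOCAB = ["<eos>", "the", "cat", "sat", "on", "mat"]
EOS_ID = 0
# Hypothetical sentence the toy "model" is rigged to prefer.
TARGET = ["the", "cat", "sat", "on", "the", "mat", "<eos>"]


def toy_logits(token_ids: list[int]) -> list[float]:
    """Hypothetical stand-in for a decoder: one raw score (logit) per
    vocabulary entry for the next position, favouring the next TARGET word
    based only on how long the sequence already is."""
    step = min(len(token_ids), len(TARGET) - 1)
    logits = [0.0] * len(VOCAB)
    logits[VOCAB.index(TARGET[step])] = 5.0
    return logits


def softmax(logits: list[float], temperature: float = 1.0) -> list[float]:
    """Turn logits into probabilities; a higher temperature flattens them."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_top_k(probs: list[float], k: int, rng: random.Random) -> int:
    """Keep the k most probable token ids and draw one in proportion to its probability."""
    top_ids = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    return rng.choices(top_ids, weights=[probs[i] for i in top_ids], k=1)[0]


def generate(prompt_ids: list[int], max_new_tokens: int = 20,
             temperature: float = 1.0, top_k: int = 1, seed: int = 0) -> list[int]:
    rng = random.Random(seed)
    ids = list(prompt_ids)
    for _ in range(max_new_tokens):  # stop condition: length limit
        # Input & probability: score the whole sequence so far.
        probs = softmax(toy_logits(ids), temperature)
        # Sampling: pick the next token from the distribution.
        next_id = sample_top_k(probs, top_k, rng)
        if next_id == EOS_ID:  # stop condition: end-of-sequence token
            break
        # Iteration: append the token and feed the longer sequence back in.
        ids.append(next_id)
    return ids


prompt = [VOCAB.index("the")]
print(" ".join(VOCAB[i] for i in generate(prompt)))  # greedy, since top_k=1
print(" ".join(VOCAB[i] for i in generate(prompt, temperature=3.0, top_k=3)))
```

With `top_k=1` the loop is greedy and always produces "the cat sat on the mat". Raising `top_k` and `temperature` lets less probable tokens through, which is the trade-off described under Sampling: more variety, but also more chance of an unexpected or wrong word.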

The mathematical idea is to model the probability of an entire sequence, P(x), by factoring it into a chain of conditional probabilities:

$$P(x) = P(x_1, x_2, \ldots, x_T) = \prod_{t=1}^{T} P(x_t \mid x_1, \ldots, x_{t-1})$$

In plain English, the probability of a whole sentence is the probability of the first word, times the probability of the second word given the first, times the probability of the third word given the first two, and so on.
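As a worked example of this chain rule, the sketch below scores a three-word sentence using hypothetical conditional probabilities, keyed by the full prefix so that each factor really is conditioned on all previous words. It sums log-probabilities instead of multiplying raw probabilities, a standard trick because the product of many small numbers quickly underflows to zero in floating point. For "the cat sat", the result is 0.5 × 0.4 × 0.7 = 0.14.

```python
import math

# Hypothetical values of P(next word | all previous words); the key is the prefix.
COND_PROBS = {
    (): {"the": 0.5, "a": 0.5},
    ("the",): {"cat": 0.4, "dog": 0.6},
    ("the", "cat"): {"sat": 0.7, "ran": 0.3},
}


def sequence_log_prob(words: list[str]) -> float:
    """log P(x) = sum over t of log P(x_t | x_1, ..., x_{t-1})."""
    total = 0.0
    for t, word in enumerate(words):
        p = COND_PROBS.get(tuple(words[:t]), {}).get(word, 0.0)
        if p == 0.0:
            return float("-inf")  # impossible under this toy model
        total += math.log(p)
    return total


print(math.exp(sequence_log_prob(["the", "cat", "sat"])))
```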

3. A key distinction: Auto-regressive (GPT) vs. autoencoding (BERT)

A common source of confusion is the distinction between autoregressive (AR) and autoencoding (AE) models. Understanding this distinction is key to understanding the AI landscape and the potential applications of AI at an enterprise level, for example, in adaptive automatic translation, which Pangeanic completed in July 2025.

| Feature | Autoregressive (AR) Models | Autoencoding (AE) Models |
|---|---|---|
| Example | GPT-4, Gemini, Claude, DeepSeek, etc. | BERT, RoBERTa |
| Primary Goal | Generation (Creating new, coherent text) | Understanding (Analyzing existing text) |
| How it "Sees" Text | Unidirectional: Sees only the past (tokens 1 to t-1) to predict the future (token t). | Bidirectional: Sees the entire sentence at once (past and future) to understand context. |
| Core Task | "Predict the next word." | "Fill in the blank." (Masked Language Modeling) |
| Analogy | A novelist writing a story one word at a time. | An editor reading a full paragraph to find a missing word. |
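The unidirectional versus bidirectional distinction above comes down to a single mask inside self-attention. This is a minimal, pure-Python sketch with random, made-up query and key vectors (real models learn them, use many heads and layers, and rely on tensor libraries). It only shows the shape of the difference: with the causal mask, every weight above the diagonal is exactly zero, so no token can draw on tokens that come after it.

```python
import math
import random


def attention_weights(queries: list[list[float]], keys: list[list[float]],
                      causal: bool) -> list[list[float]]:
    """Scaled dot-product attention weights for one head.

    Row i says how much token i attends to each token j. With causal=True,
    positions j > i (the future) are masked out, as in a GPT-style decoder;
    with causal=False every token sees the whole sequence, as in BERT.
    """
    d = len(queries[0])
    weights = []
    for i, q in enumerate(queries):
        scores = []
        for j, k in enumerate(keys):
            if causal and j > i:
                scores.append(float("-inf"))  # mask: token j comes after token i
            else:
                scores.append(sum(a * b for a, b in zip(q, k)) / math.sqrt(d))
        peak = max(scores)  # position i itself is never masked, so this is finite
        exps = [math.exp(s - peak) for s in scores]  # exp(-inf) evaluates to 0.0
        total = sum(exps)
        weights.append([e / total for e in exps])
    return weights


rng = random.Random(0)
n_tokens, dim = 4, 8
queries = [[rng.gauss(0, 1) for _ in range(dim)] for _ in range(n_tokens)]
keys = [[rng.gauss(0, 1) for _ in range(dim)] for _ in range(n_tokens)]

for row in attention_weights(queries, keys, causal=True):
    print([round(w, 2) for w in row])  # zeros above the diagonal
```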

At Pangeanic, we leverage both types of models. Autoencoding models are excellent for tasks such as sentiment analysis, document classification, and Named Entity Recognition (NER). Auto-regressive models are the powerhouses we use for our generative services, but with a crucial layer of control.

4. Typical use cases, applications, limitations, and the terminology problem

Autoregressive models, from classical statistical AR models for time series to modern neural networks, are widely used in many fields because they predict future values from past observations. Here are some typical applications:

- Finance: Predicting stock prices, interest rates, and economic indicators by analyzing past market data.

- Economics: Forecasting inflation rates, GDP growth, and other economic metrics using historical data.

- Weather Forecasting: Modeling and predicting weather patterns by analyzing past weather data.

- Text Generation: Generating text by predicting the next word based on the previous words in a sequence, used in chatbots and virtual assistants.

- Speech Recognition: Transcribing spoken words into text by predicting the next word from the audio and the context of the previous words.

- Large Language Models (LLMs): Tools like ChatGPT are "general-purpose predictors," capable of answering questions, writing code, and drafting essays.

- Machine Translation: A model takes a source sentence and generates the translated sentence word by word.

- Audio and Speech Generation: AI voices are generated one audio "sample" at a time, with each new sample conditioned on the previous ones.
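In its classical statistical form, an autoregressive model of order 1, AR(1), predicts each value as a fixed multiple of the previous one plus noise: x_t = φ·x_{t-1} + ε_t. The sketch below is an illustration on synthetic data, not a forecasting recipe: it simulates a zero-mean series with a known φ = 0.8 (an assumption chosen so the fit can be checked), estimates φ by least squares, and forecasts three steps ahead by feeding each prediction back in, the same "regression on itself" loop an LLM runs over tokens. Real financial or economic forecasting would use higher-order models, an intercept, and proper diagnostics.

```python
import random


def fit_ar1(series: list[float]) -> float:
    """Least-squares estimate of phi in x_t = phi * x_{t-1} + noise
    (assumes a zero-mean series, so no intercept is fitted)."""
    num = sum(x_prev * x for x_prev, x in zip(series[:-1], series[1:]))
    den = sum(x_prev * x_prev for x_prev in series[:-1])
    return num / den


def forecast_ar1(last_value: float, phi: float, steps: int) -> list[float]:
    """Multi-step forecast: each prediction is fed back in as the next input."""
    predictions = []
    current = last_value
    for _ in range(steps):
        current = phi * current
        predictions.append(current)
    return predictions


# Synthetic data from a known process (phi = 0.8) so the estimate can be checked.
rng = random.Random(42)
series = [0.0]
for _ in range(2000):
    series.append(0.8 * series[-1] + rng.gauss(0, 1))

phi_hat = fit_ar1(series)
print(round(phi_hat, 3), [round(v, 3) for v in forecast_ar1(series[-1], phi_hat, 3)])
```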

The auto-regressive principle is the foundation of most modern Generative AI for text, code, and speech. These applications demonstrate the versatility and effectiveness of auto-regressive models across domains, making them valuable tools for data analysis and prediction. However, every prediction carries an inherent risk of hallucination: the technology's greatest strength (creative, sequential generation) is also its greatest weakness for professional use.

5. The critical limitation: Lack of control

Standard autoregressive models have significant limitations in high-stakes enterprise applications. We therefore need to assess realistically what can and cannot be done, set expectations, and define clear, achievable KPIs and step-by-step implementation plans, that is, small pilots in controlled environments. Some of the limitations to take into account are:

- Error Propagation: Because each new word depends on all previous words, a single bad prediction (a "hallucination" or a mistranslation) early in the sequence can "snowball," leading the model further into factual error.

- Lack of "True" Planning: The model doesn't "plan" a complete response. It is, at its core, a "next-word predictor." This means it cannot be guaranteed to adhere to strict rules, such as a specific glossary or terminology list, even when instructed to. If you need a planned answer, your engineering team needs to implement additional techniques such as Chain-of-Thought (CoT), Tree-of-Thought (ToT), and Graph-of-Thought (GoT). ToT and GoT allow models to explore multiple reasoning paths simultaneously, backtrack from dead ends, and recombine partial solutions; combined with the dynamic use of external tools or databases, these techniques form the basis of agentic AI systems.

  At Pangeanic, these ideas converge, for example, in our agentic quality-assurance (QA) methodology for Deep Adaptive AI Translation or machine translation quality estimation (MTQE), which enables AI models not only to generate, but also to plan, verify, and reflect on their output before delivery.

- The "Terminology Blind Spot": A general-purpose LLM trained on the entire internet does not know your company's specific product names, the precise legal definitions in a contract, or the mandated terminology for a pharmaceutical filing. When it generates a response, it chooses the most probable word from its general training, not the correct word for your specific domain.

This is unacceptable in sectors like healthcare, pharma, legal, or finance, where a single incorrect term can have massive legal or financial consequences.
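The "verify before delivery" idea can be made concrete with a deliberately naive check. This is a hypothetical sketch, not Pangeanic's QA methodology: `find_terminology_violations` and the sample English-Spanish glossary are invented for illustration. It flags translations in which a glossary term appears in the source but the mandated target term is missing, which is exactly the kind of error a model produces when it picks the most probable general-purpose word.

```python
def find_terminology_violations(source: str, translation: str,
                                glossary: dict[str, str]) -> list[tuple[str, str]]:
    """Return (source term, required target term) pairs where the source
    contains a glossary term but the translation lacks the mandated equivalent.
    Naive case-insensitive substring matching; real QA would also handle
    inflection, tokenization, and approved term variants."""
    src, tgt = source.lower(), translation.lower()
    return [
        (term, required)
        for term, required in glossary.items()
        if term.lower() in src and required.lower() not in tgt
    ]


# Hypothetical client glossary (English -> Spanish) for a pharmaceutical filing.
glossary = {
    "adverse event": "acontecimiento adverso",
    "marketing authorisation": "autorización de comercialización",
}
source = "Report any adverse event to the marketing authorisation holder."
mt_output = "Notifique cualquier efecto secundario al titular de la autorización de comercialización."
print(find_terminology_violations(source, mt_output, glossary))
```

Here the check flags "adverse event", because the output uses a generic "efecto secundario" instead of the mandated term, while the correctly translated "marketing authorisation" passes.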

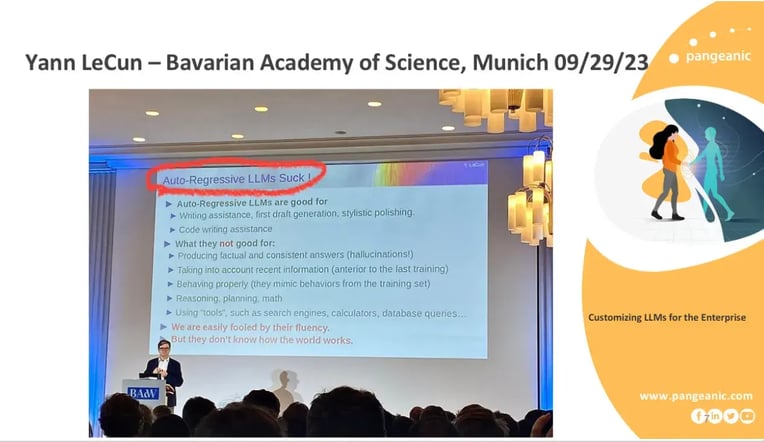

6. Yann LeCun on auto-regressive models

I have followed Yann LeCun's take on AI and the LLM fever for years. For those not familiar with the "who's who" of AI, Yann LeCun is a Turing Award laureate and currently Meta's VP and Chief AI Scientist. He is a prominent figure in artificial intelligence, recognized for his significant contributions to deep learning, for which he, Geoffrey Hinton, and Yoshua Bengio received the 2018 Turing Award (the highest honor in the field of computer science). I have quoted him on several occasions and in presentations over the last few years, to the dismay of many.

Yann believes that auto-regressive Large Language Models (LLMs) are a fundamentally flawed approach to achieving higher artificial intelligence or AGI (Artificial General Intelligence). He argues they are incapable of true reasoning and understanding, and has actively advised against working on them, pointing instead to alternative architectures like world models.

I've summarized Yann's core critique of auto-regressive LLMs and the alternative path he advocates.

| Aspect | Yann LeCun's Critique of Auto-Regressive LLMs | Proposed Alternative |
|---|---|---|
| Core Problem | Inherently Flawed Architecture: Token-by-token generation leads to exponentially compounding errors, making long, coherent, and correct responses improbable.[1], [2] | World Models: Systems that learn an internal model of how the world works through observation, similar to humans and animals. |
| Key Limitations | Lack of Reasoning & Planning: LLMs are purely "reactive" (System 1 thinking) and cannot engage in deliberate reasoning or planning, or imagine the consequences of actions.[3], [4] | Joint Embedding Predictive Architecture (JEPA): A non-generative model that predicts in an abstract representation space, learning the dynamics of the world without getting bogged down in unpredictable details. |
| | Poor Understanding of Reality: Trained only on text, a low-bandwidth and discrete data source, they lack a grounded understanding of the physical world that a child learns through vision and interaction.[5] | Hierarchical Planning: Breaking down complex tasks into manageable sub-goals, a capability that current AI systems lack but that is essential for human-level intelligence. |
| | Unreliability & Uncontrollability: They "make stuff up" (hallucinate) and cannot be made reliably factual or safe for critical applications. | Energy-Based Models (EBMs): A framework where the model learns to minimize a cost function, making systems more controllable and aligned with specified objectives. |
| His Direct Stance | "Auto-Regressive LLMs are doomed". He has stated, "Don't work on LLMs," and that "from now on 5 years, no sane person will use auto-regressive models". | He is pursuing this vision at Meta with models like V-JEPA for video understanding, aiming to create AI that can learn how the world works from observation alone. |
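The "exponentially compounding errors" argument in LeCun's critique rests on simple arithmetic. Under his simplifying assumption that each generated token independently has a probability e of taking the answer off track, the chance that an n-token answer stays correct is (1 - e)^n. The sketch below evaluates that expression for an illustrative e = 1% (an assumed value, not a measured error rate): roughly 0.90 for 10 tokens, 0.37 for 100 tokens, and about 0.00004 for 1,000 tokens. The independence assumption is itself part of the debate, since a model can sometimes recover from a poor token.

```python
def prob_error_free(per_token_error: float, n_tokens: int) -> float:
    """P(no off-track token in n steps), assuming independent per-token errors."""
    return (1.0 - per_token_error) ** n_tokens


# Illustrative per-token error rate of 1% (an assumption, not a measurement).
for n in (10, 100, 1000):
    print(f"{n:>5} tokens: {prob_error_free(0.01, n):.5f}")
```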

What This Means for the Future of AI

LeCun's position is a fundamental challenge to the current AI mainstream. His arguments suggest that simply scaling up existing LLMs will not lead to human-level AI, a point I commented on recently. Instead, he envisions a shift towards architectures that learn from vast amounts of sensory data (such as video) to develop common sense and a practical understanding of reality, which he sees as the proper foundation for intelligence. This is, however, only one side of an ongoing scientific debate. Other prominent researchers and companies continue to invest heavily in LLMs, and some research, such as a DeepMind paper, argues that auto-regressive LLMs are computationally universal.

Key takeaways:

At Pangeanic, our two decades serving law enforcement, government, and enterprise clients have taught us that AI is a powerful tool, not a magic solution. Auto-regressive models represent remarkable engineering, but they require careful implementation, proper constraints, and realistic expectations. The path forward isn't blind adoption or wholesale rejection, but intelligent integration: combining the generative power of these models with robust control mechanisms, domain-specific fine-tuning, and rigorous quality assurance. Success in enterprise AI doesn't come from chasing hype; it comes from understanding the technology's true capabilities and limitations, and then building accordingly.