19/02/2025

Machine Translation Quality Estimation: A comprehensive analysis

The demand for fast, accurate, and reliable translation services has never been higher. As organizations expand globally, the volume of content requiring translation has grown exponentially, making traditional human-only translation workflows unsustainable. This is where Machine Translation Quality Estimation (MTQE) emerges as a crucial technology in the new, AI-driven automated workflows, helping organizations optimize their global content delivery strategies and understand their clients while ensuring quality translations at scale. A solid, company-wide Machine Translation deployment is not, after all, as easy as plugging into an LLM!

Why MTQE matters in today's world

Let's face it: we're all dealing with more international communication than ever. Whether you're a business expanding globally, a researcher collaborating with international colleagues, or simply trying to understand content in another language, the need for reliable machine translation is everywhere. But here's the challenge: how do you know if a machine translation is good enough without a human expert checking every piece? This is where MTQE steps in, acting like an intelligent quality control system that can predict how good a translation will likely be before any human looks at it. It's like having a knowledgeable assistant who can quickly tell you which translations need more attention and which are ready to go. At Pangeanic, we pride ourselves on the virtuous cycle that Deep Adaptive AI Translation creates, which earned us mentions under Neural Machine Translation in Gartner's Hype Cycle of NLP Technologies in two consecutive years, 2023 and 2024.

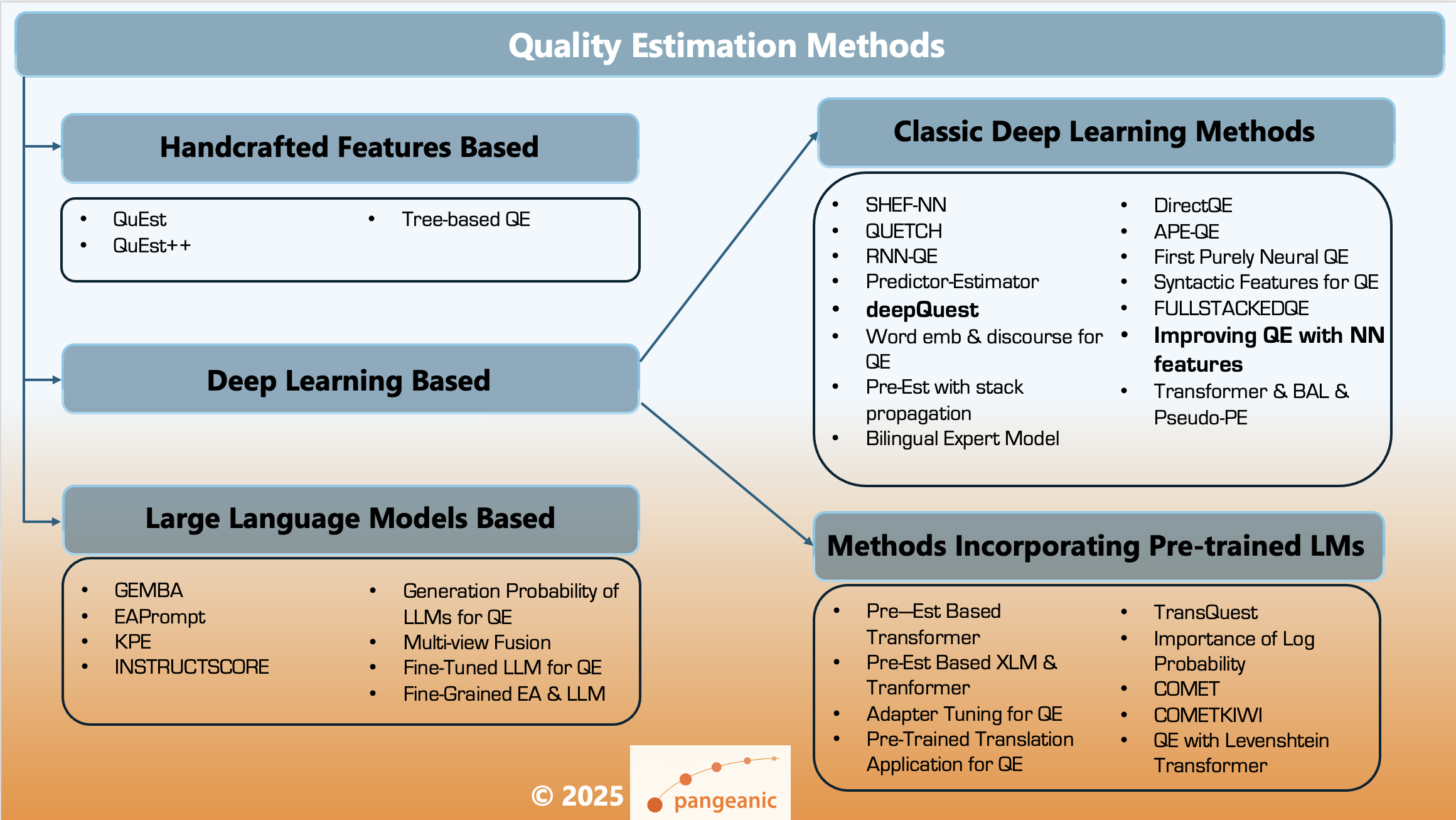

The evolution of MTQE

The journey of MTQE is fascinating because it mirrors our own evolving relationship with technology. In its early days, MTQE was like a basic spell-checker, looking at simple patterns and rules to guess if a translation was good. It would check whether all the words were translated and the grammar looked roughly correct. But just as our smartphones have gotten smarter, so has MTQE. Today's systems are more like language experts, understanding context, nuance, and even cultural references. They use advanced artificial intelligence to analyze translations in surprisingly human-like ways. The latest systems, powered by the same kind of technology that drives many AI models, can even understand subtle meaning differences and idiomatic expressions.

A prime example of modern MTQE is CometKiwi, the winner of the WMT22 Quality Estimation shared task and the current gold standard for MTQE. Most current enterprise MTQE systems use it and fine-tune it to their purposes. CometKiwi offers features such as:

- Predictor-Estimator Architecture (to evaluate translation quality at the sentence and document levels)

- Word-Level Sequence Tagging (to identify problem areas within translations)

- Few-Shot Learning (to adapt quickly to new languages and text domains)

- Explanation Extraction (to provide insights into translation quality predictions)
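
To make the reference-free idea concrete, here is a minimal sketch of how sentence-level scoring with CometKiwi might look. It assumes the open-source `unbabel-comet` Python package is installed and that you have accepted the license for the `Unbabel/wmt22-cometkiwi-da` checkpoint on Hugging Face. The helper names `build_qe_inputs` and `score_with_cometkiwi` and the sample sentences are hypothetical and only for illustration.

```python
from typing import Dict, List


def build_qe_inputs(sources: List[str], translations: List[str]) -> List[Dict[str, str]]:
    """Pair each source segment with its machine translation.

    Note there is no "ref" field: quality estimation needs no human reference.
    """
    if len(sources) != len(translations):
        raise ValueError("sources and translations must have the same length")
    return [{"src": src, "mt": mt} for src, mt in zip(sources, translations)]


def score_with_cometkiwi(samples, model_name="Unbabel/wmt22-cometkiwi-da", gpus=0):
    """Return (segment_scores, system_score) for the given samples.

    Assumption: the unbabel-comet package is installed and the checkpoint
    is accessible. The import is lazy so the helper above works without it.
    """
    from comet import download_model, load_from_checkpoint

    model = load_from_checkpoint(download_model(model_name))
    output = model.predict(samples, batch_size=8, gpus=gpus)
    return output.scores, output.system_score


# Hypothetical sample segment (Spanish source, English machine translation).
samples = build_qe_inputs(
    ["El contrato entra en vigor el 1 de enero."],
    ["The contract enters into force on January 1."],
)
print(samples)
```

Calling `score_with_cometkiwi(samples)` downloads the model on first use, so it is kept out of the module-level code here. Fine-tuned enterprise deployments would typically load their own checkpoint instead of the public one.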

Traditional reference-based MTQE

Before reference-free quality estimation, translation quality was evaluated with traditional reference-based metrics, which compare machine translations to human-generated references. Commonly used metrics included:

- BLEU (Bilingual Evaluation Understudy): A metric that measures n-gram (word sequence) overlap between a machine translation and human translations but lacks context awareness. It was initially developed for statistical machine translation. The core principle is that the closer a machine translation is to a professional human translation, the better its quality. BLEU was among the first metrics to correlate strongly with human judgment and remains widely used due to its automation and cost-effectiveness. It evaluates individual translated segments (typically sentences) against high-quality reference translations, averaging scores across the entire corpus to estimate overall translation quality. However, BLEU does not consider factors like intelligibility or grammatical correctness. It is still a helpful indication of a model's performance over time.

- TER (Translation Edit Rate): This metric, also known as Translation Error Rate, quantifies the number of edits (e.g., insertions, deletions, substitutions, and shifts) needed to transform a machine-translated output (hypothesis) into a reference translation. It is designed to be an intuitive and efficient measure for evaluating machine translation quality, avoiding the complexity of meaning-based approaches and the labor-intensive nature of human judgments. TER calculates the amount of editing a human would need to make the system output match the reference exactly.

TER is implemented in tools like sacreBLEU, whose implementation is inspired by TERCOM; TERCOM itself supports input formats such as SGML (NIST format), XML, or Trans. TER has been shown to correlate well with human judgments of translation quality, often performing as well as or better than BLEU, even with fewer references. A variant called Human-Targeted TER (HTER) further improves correlation with human judgments, outperforming metrics like BLEU and HMETEOR in some cases. TER and HTER are effective, automated alternatives for assessing machine translation quality.

- METEOR (Metric for Evaluation of Translation with Explicit Ordering) is an automatic metric for evaluating machine translation quality by comparing a machine-generated translation to human reference translations. It uses a generalized concept of unigram matching, considering exact word matches, stemmed forms, synonyms, and morphological variants. METEOR calculates a score based on a combination of unigram precision, unigram recall, and a measure of fragmentation, which assesses how well-ordered the matched words are in the machine translation compared to the reference. METEOR improves upon simple precision-recall metrics by incorporating these additional matching strategies and fragmentation measures. It has shown a higher correlation with human judgments of translation quality compared to basic unigram-based metrics, achieving Pearson R correlation values of 0.347 for Arabic-to-English and 0.331 for Chinese-to-English datasets.
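
To show how reference-based metrics of this kind work in practice, here is a simplified, standard-library-only sketch of two of them. It is not the sacreBLEU or TERCOM implementation. The BLEU function is an unsmoothed, single-reference, sentence-level variant. The edit-rate function counts only insertions, deletions, and substitutions, leaving out the shift operation that real TER includes. The function names and example sentences are illustrative assumptions.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    """Count all n-grams of length n in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def sentence_bleu(hypothesis, reference, max_n=4):
    """Simplified BLEU: clipped n-gram precision times a brevity penalty."""
    hyp, ref = hypothesis.split(), reference.split()
    if not hyp:
        return 0.0
    log_precisions = []
    for n in range(1, max_n + 1):
        hyp_counts, ref_counts = ngrams(hyp, n), ngrams(ref, n)
        # Clip each hypothesis n-gram count by its count in the reference.
        overlap = sum(min(count, ref_counts[gram]) for gram, count in hyp_counts.items())
        total = sum(hyp_counts.values())
        if overlap == 0 or total == 0:
            return 0.0  # no smoothing in this sketch
        log_precisions.append(math.log(overlap / total))
    # Penalize hypotheses that are shorter than the reference.
    brevity_penalty = 1.0 if len(hyp) > len(ref) else math.exp(1 - len(ref) / len(hyp))
    return brevity_penalty * math.exp(sum(log_precisions) / max_n)


def edit_rate(hypothesis, reference):
    """Word-level edit distance divided by reference length (TER without shifts)."""
    hyp, ref = hypothesis.split(), reference.split()
    previous = list(range(len(ref) + 1))
    for i, hyp_word in enumerate(hyp, start=1):
        current = [i]
        for j, ref_word in enumerate(ref, start=1):
            cost = 0 if hyp_word == ref_word else 1
            current.append(min(previous[j] + 1,          # delete a hypothesis word
                               current[j - 1] + 1,       # insert a reference word
                               previous[j - 1] + cost))  # match or substitute
        previous = current
    return previous[-1] / max(len(ref), 1)


reference = "the cat sat on the mat"
hypothesis = "the cat sat on a mat"
bleu = sentence_bleu(hypothesis, reference)
ter = edit_rate(hypothesis, reference)
print(f"BLEU={bleu:.3f}  edit rate={ter:.3f}")
```

Both functions need the human reference as input, which is exactly the dependency that reference-free MTQE removes. For reported results, an established tool such as sacreBLEU is the safer choice because tokenization and smoothing choices change the scores.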

An Overview of MTQE over the years

While these approaches were valid and helpful at the time, they had several limitations:

- Dependence on human reference translations.

- Inability to account for context and semantic nuances.

- Limited applicability in real-world scenarios.

What makes modern MTQE so powerful?

Modern Machine Translation Quality Estimation works like a team of experts examining a translation from different angles. It starts with a close look at individual words and phrases, like someone going through a document and marking any questionable word choices. These systems use language models to check vocabulary and grammar and to flag potential mistranslations, so the translation stays true to the original meaning. Then the view widens to entire sentences, checking that they flow well and make sense in context. This includes examining sentence structure, idiomatic expressions, and overall coherence to ensure the translation reads as if it were originally written in the target language. The most sophisticated systems can even evaluate entire documents to maintain consistent style and ensure specialized terms are used correctly.

Most systems are open source, meaning companies can improve and fine-tune the baseline using approved, certified bilingual corpora or files containing human post-edits.

The precision of Machine Translation Quality Estimation (MTQE) is critically important in fields such as law, medicine, and technology, where attention to detail is paramount. Furthermore, contemporary MTQE systems can improve through feedback, enhancing their accuracy over time. MTQE functions like a team of editors, each concentrating on various aspects of the translation process, including word choice, grammar, tone, and industry-specific language. This collaborative approach ensures that translations are precise, contextually appropriate, and professionally rendered.

MTQE enhances translation workflows

MTQE systems offer several advantages that make translation easier and more efficient. One main benefit is the ability to check translation quality practically in real time (this requires hosting on a high-end CPU or GPU).

This means translators get immediate feedback on accuracy without needing reference translations. This quick feedback helps decide if human review is necessary, which speeds up the translation process. MTQE also reduces costs by lessening the need for human reviewers. It allows better resource use and speeds up the publication of multilingual content. Besides saving money, MTQE plays a key role in ensuring quality. It helps keep translations consistent, finds potential errors before they go live, and enhances customer satisfaction by delivering more accurate translations.

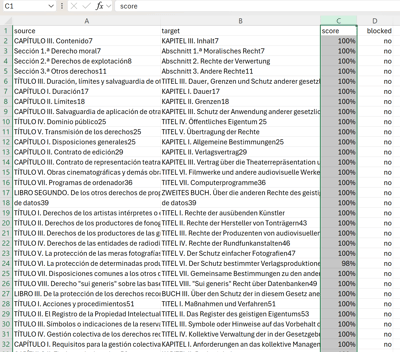

MTQE runs segment by segment and offers a document-level estimation score. Users can home in on low-confidence segments either in a CSV file or as additional information in an XLIFF file.
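
The segment-by-segment workflow can be sketched in a few lines of Python. This is an illustrative sketch, not Pangeanic's implementation: the `triage` and `to_csv` helpers, the 0.8 threshold, the sample scores, and the choice of a plain average as the document-level score are all assumptions. In practice the scores would come from an MTQE model and the threshold would be tuned per language pair and content type.

```python
import csv
import io
from statistics import mean


def triage(segments, scores, threshold=0.8):
    """Return (document_score, needs_review) for scored segments.

    Assumptions: the document-level score is the plain mean of the segment
    scores, and any segment scoring below `threshold` goes to human review.
    """
    rows = [
        {"id": i, "source": src, "mt": mt, "score": score}
        for i, ((src, mt), score) in enumerate(zip(segments, scores), start=1)
    ]
    needs_review = [row for row in rows if row["score"] < threshold]
    document_score = mean(scores) if scores else 0.0
    return document_score, needs_review


def to_csv(rows):
    """Serialize the low-confidence segments as CSV text for reviewers."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["id", "source", "mt", "score"])
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


# Hypothetical segments and QE scores, for illustration only.
segments = [
    ("Hola mundo", "Hello world"),
    ("Cierre la válvula antes de abrir la tapa.", "Close the lid before opening the valve."),
    ("Gracias", "Thank you"),
]
scores = [0.91, 0.42, 0.88]

document_score, needs_review = triage(segments, scores)
report = to_csv(needs_review)
print(f"document score: {document_score:.2f}")
print(report)
```

Only the second segment, where the translation swaps "valve" and "lid", falls below the threshold and lands in the report. The other two can be published without human review.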

The challenges we're still facing

However, MTQE is not without its flaws. The number one issue is that it relies heavily on good data to learn from, especially for less common languages. It’s like learning a new language: the more you practice, the better you get. MTQE systems need extensive exposure to various language examples to nail down accuracy. If they don’t have a robust dataset with a mix of linguistic inputs, they can stumble in accurately assessing translations, especially for low-resourced languages with fewer digital or textual resources. This lack of data can lead to less effective quality checks for languages that receive less support, widening the gap between well-resourced and underrepresented languages.

Another challenge is that these systems sometimes recognize when a translation is off but can’t easily explain what’s wrong. It’s akin to hearing a sentence that sounds odd or out of place, but you can’t quite put your finger on why it feels that way. This lack of clarity can make it difficult for human translators to trust the feedback they receive, as they lack a clear understanding of the specific issues that need addressing. MTQE often struggles to spot context-based errors, such as nuanced tone shifts, cultural references, or idioms that require a deeper understanding of the culture and context in which they are used.

These subtleties are crucial for accurate translation, as they can significantly alter the intended meaning if not adequately understood and conveyed. Adding to the mix is the "black box" problem of many AI systems, where even the creators can struggle to understand how decisions are made. This opacity in the decision-making process makes it tricky to improve the system's accuracy over time, as developers and users alike are left guessing about the internal workings and logic of the AI. Tackling these issues means pushing for more research into clearer, explainable AI models that can provide insights into their decision-making processes. Additionally, collecting high-quality data across multiple languages and contexts is essential to effectively train these systems, ensuring they can handle the diverse linguistic and cultural nuances they encounter. This comprehensive approach will help build more reliable and transparent MTQE systems that can be trusted to deliver high-quality translations.

"MTQE has become an essential step in an organization's strategy to automate translation processes. It is the first step to understand that the quality we are obtaining is good enough to let it go or send it to a human reviewer. But this is only the first step, and including an agentic LQA will close the circle for many localization teams. This type of control aligns very well with LangOps principles of professionals being in charge of new, reliable language processes rather than being considered passive localization teams." - Maria Angeles García Escrivà, Head of Machine Translation at Pangeanic

What this means for you

Whether you are a business owner, a content creator, or someone who frequently engages in translations, understanding the role of Machine Translation Quality Estimation (MTQE) is crucial. MTQE is a vital tool in enhancing the accessibility and reliability of quality translations, effectively bridging language barriers. By automating the initial phases of quality assessment, MTQE significantly reduces the time and resources needed for manual review, enabling businesses to expand their operations and reach global audiences more efficiently. It is important to recognize that MTQE is not intended to replace human expertise but aims to enhance and focus it. Human translators and editors can then dedicate their efforts to the more intricate aspects of language that machines may overlook, such as cultural context, tone, and creative expression. MTQE functions like an intelligent assistant, helping you allocate your time and energy effectively when managing translations by identifying areas that require attention and instilling confidence in those that meet high standards. This synergy of machine efficiency and human insight results in quicker turnaround times, cost savings, and, ultimately, higher-quality translations that resonate with diverse audiences.

As our Head of Machine Translation says above, MTQE has become a "must-have" for any organization or localization department that relies on automated processes while still involving humans. The next step is to include custom Linguistic Quality Assurance (LQA) that detects errors beyond language fluency and context, particular to each scenario, like terminology compliance.

Looking ahead

At Pangeanic, we are dedicated to breaking language barriers and fostering global communication through innovative translation technology. As part of this mission, we proudly announce the forthcoming release in 2025 of Deep Adaptive AI Translation v2, which merges Machine Translation Quality Estimation (MTQE) and Linguistic Quality Assurance (LQA) into a sophisticated, agentic workflow, even for enterprise-grade, complex PDF translation services.

This groundbreaking system will not only detect and correct context and fluency errors in machine translations but also identify and address errors specific to each client's requirements. By integrating these advanced technologies into an autonomous workflow, we aim to redefine the translation process, ensuring that every output faithfully preserves the intended meaning, context, and intent.

We believe the future of translation goes beyond merely converting words from one language to another—it’s about enabling seamless, accurate, and meaningful communication across languages. With each iteration, our evolving MTQE technology is at the forefront of this revolution, becoming more sophisticated and user-friendly. It works tirelessly behind the scenes to ensure that machine translations are not just translated but translated well.

At Pangeanic, we are committed to empowering businesses and individuals to communicate more effectively and confidently across language barriers. Our agentic workflow already detects and corrects errors for preview clients, delivering high-quality translations that meet the highest standards of accuracy and reliability.

We are proud to lead this evolution in translation technology, building bridges across languages and cultures in our increasingly interconnected world. Our innovative approach shapes a future where language is no longer a barrier but a gateway to global understanding and collaboration.

Together, let’s transform the way the world communicates.