
09/02/2024

Demystifying Mixture of Experts (MoE): The future for deep GenAI systems

Understand what a Mixture of Experts is, how it works, and why it is behind some of the best LLM architectures.

Understanding the Concept of Mixture of Experts

The MoE structure appeared long before deep learning and AI became popular concepts. It was first proposed in 1991 by Robert A. Jacobs and Michael I. Jordan of the Department of Brain and Cognitive Sciences at MIT, together with Steven J. Nowlan and Geoffrey E. Hinton (winner of the ACM A.M. Turing Award, who worked at Google until he left in May 2023) of the Department of Computer Science at the University of Toronto. “Mixture of Experts” (MoE) is a machine learning technique that trains multiple models, known as ‘experts’, together with a gating function that decides how much each one should contribute for a given input. The architecture adopts a conditional computation paradigm: instead of running the whole ensemble every time, it selects and activates only the experts that are relevant to the data or task at hand. Each expert specializes in a different part of the input space, so it can make better predictions for inputs that fall in that part. Their predictions are then combined into a final output, weighted by how much the gating function trusts each expert for that input. Nevertheless, an MoE behaves as a single, big model.

Applications of Mixture of Experts

MoE has been applied successfully across many fields. In natural language processing, it has been used for machine translation, sentiment analysis, and question answering. By combining the predictions of multiple language models, MoE can improve translation accuracy and the classification of sentiment in complex texts.

In computer vision, MoE has been utilized for object recognition, image captioning, and video analysis tasks. By combining the outputs of multiple models, MoE can accurately identify objects in images, generate descriptive captions, and analyze video content.

MoE has also found applications in recommendation systems, where it is used to personalize recommendations based on user preferences and behavior. By combining the predictions of multiple recommendation models, MoE can provide more accurate and diverse recommendations, leading to improved user satisfaction.

The benefits of using MoE in these applications include improved accuracy, robustness to complex data, and adaptability to different user preferences. MoE can capture complex patterns and relationships in the data, leading to more accurate predictions. It is also robust to diverse and noisy data, making it suitable for real-world applications. Additionally, MoE can adapt to user preferences by assigning weights to experts based on user feedback, providing personalized recommendations.

What is the purpose of a MoE (Mixture of Experts) in machine learning

The idea behind MoE is that different models may be better at making accurate predictions for specific subsets of the data, so an ensemble of them can overcome the limitations of any individual model and provide better overall performance.

“We will see that small models with large context windows, improved attention, and access to private data repositories will be the future of AI. This allows AI systems to use tools like vector databases and knowledge graphs to leverage up-to-the-minute data, rather than relying only on static or outdated training.” - Manuel Herranz, CEO

How does a Mixture of Experts work

One way to build an MoE is to divide the input data into several subsets, or “clusters”, based on some similarity metric. Each cluster is then assigned to a specific expert model, which is trained to specialize in making predictions for that cluster. In this setup, each expert is trained independently on its subset of the training data, using an algorithm or architecture tailored to its particular strengths. For example, one expert might use a deep neural network with many layers to capture complex relationships in the data, while another uses a simpler linear regression model to handle straightforward cases. (In the original 1991 formulation, the partition is not fixed in advance: the gating network learns it while the experts are being trained.)
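The cluster-then-train recipe can be sketched in a few lines of Python. This is a minimal illustration under our own assumptions, not Pangeanic's implementation: inputs are single numbers, k-means on the inputs is the similarity metric, every expert is a least-squares line, and routing is hard (only the nearest cluster's expert answers). The function names `kmeans_1d`, `nearest`, `fit_line` and `train_cluster_experts` are hypothetical.

```python
# A minimal sketch of "cluster the inputs, then train one expert per cluster".
# Assumptions for illustration only: 1-D inputs, k-means on the inputs as the
# similarity metric, and a least-squares line as every expert.


def nearest(x, centroids):
    """Index of the centroid closest to x (this doubles as the routing rule)."""
    return min(range(len(centroids)), key=lambda c: abs(x - centroids[c]))


def kmeans_1d(xs, k, iters=20):
    """Partition scalar inputs into k clusters and return the centroids."""
    lo, hi = min(xs), max(xs)
    # Deterministic start: spread the centroids evenly over the input range.
    centroids = [lo + (hi - lo) * (i + 0.5) / k for i in range(k)]
    for _ in range(iters):
        groups = [[] for _ in range(k)]
        for x in xs:
            groups[nearest(x, centroids)].append(x)
        # Keep the previous centroid if a cluster ends up empty.
        centroids = [sum(g) / len(g) if g else centroids[c]
                     for c, g in enumerate(groups)]
    return centroids


def fit_line(points):
    """Ordinary least-squares fit of y = a*x + b: one expert per cluster."""
    n = len(points)
    mx = sum(x for x, _ in points) / n
    my = sum(y for _, y in points) / n
    var = sum((x - mx) ** 2 for x, _ in points)
    cov = sum((x - mx) * (y - my) for x, y in points)
    a = cov / var if var else 0.0
    return a, my - a * mx


def train_cluster_experts(data, k):
    centroids = kmeans_1d([x for x, _ in data], k)
    experts = []
    for c in range(k):
        members = [(x, y) for x, y in data if nearest(x, centroids) == c]
        experts.append(fit_line(members) if members else (0.0, 0.0))
    return centroids, experts


def predict(x, centroids, experts):
    """Hard routing: only the expert of the nearest cluster is used."""
    a, b = experts[nearest(x, centroids)]
    return a * x + b


# Toy data: two regions of the input space that follow different lines.
data = [(x, 2 * x + 1) for x in range(-10, -5)] + [(x, -x + 5) for x in range(6, 11)]
centroids, experts = train_cluster_experts(data, k=2)
print(predict(-8, centroids, experts), predict(7, centroids, experts))  # -15.0 -2.0
```

Swapping `fit_line` for a neural network on one of the clusters would give the mixed-architecture setup described above; the routing logic would stay the same.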

Once all the experts have been trained, they are combined into a single system through a gating mechanism, which determines the weighting or importance assigned to each expert's output based on the input features and the confidence level of each expert.

With hard routing, the MoE first determines which region the input falls into and then uses the corresponding expert model to make the prediction. This allows the MoE to leverage the strengths of different models for different parts of the input space, resulting in more accurate and robust predictions. More generally, the final prediction of the MoE model is a weighted combination of the predictions from all the expert models, with the weights typically determined by the gating mechanism.
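In symbols, with notation chosen here for illustration rather than taken from the original paper: for $N$ experts $f_1, \dots, f_N$ and a gating network that outputs weights $g_1(x), \dots, g_N(x)$, the combined prediction is

$$
\hat{y}(x) = \sum_{i=1}^{N} g_i(x)\, f_i(x), \qquad g_i(x) \ge 0, \qquad \sum_{i=1}^{N} g_i(x) = 1 .
$$

A common choice for soft weighting is $g(x) = \operatorname{softmax}(s(x))$, where $s(x)$ is the vector of the gate's raw scores. Hard routing (“find the region, use its expert”) is the special case $g_i(x) = 1$ for $i = \arg\max_j s_j(x)$ and $g_i(x) = 0$ for every other expert.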

Key Components of Mixture of Experts

A Mixture of Experts consists of several key components that work together to make accurate predictions. These components include:

1. Experts: These are the individual models specializing in specific subtasks or subsets of the input space. Each expert is responsible for making predictions based on its specialized knowledge.

2. Gating Network: The gating network determines the contributions of each expert based on the input data. It assigns weights to each expert's prediction, ensuring that the most relevant expert's output is given more importance.

3. Combination Method: The combination method combines the predictions of all experts to form the final prediction. This can be as simple as averaging the outputs or using more sophisticated techniques, such as weighted averaging or stacking.

4. Training Algorithm: The training algorithm is responsible for optimizing the parameters of the experts and the gating network. It aims to minimize the prediction error and maximize the overall performance of the MoE model.
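The first three components can be mapped onto a small Python class. This is a sketch under our own assumptions: experts are plain functions, the gate is hand-written rather than learned, and the fourth component, the training algorithm, is left out. `MixtureOfExperts` and its `combine` option are hypothetical names, not a library API.

```python
# Hypothetical illustration of the MoE components (the names are ours).
import math
from typing import Callable, List


def softmax(scores: List[float]) -> List[float]:
    """Turn raw gate scores into non-negative weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


class MixtureOfExperts:
    def __init__(self,
                 experts: List[Callable[[float], float]],
                 gate: Callable[[float], List[float]],
                 combine: str = "weighted"):
        self.experts = experts  # 1. experts: any callables x -> prediction
        self.gate = gate        # 2. gating network: x -> one raw score per expert
        self.combine = combine  # 3. combination method: "mean" or "weighted"

    def predict(self, x: float) -> float:
        outputs = [f(x) for f in self.experts]
        if self.combine == "mean":
            # Plain averaging ignores the gate entirely.
            return sum(outputs) / len(outputs)
        weights = softmax(self.gate(x))
        return sum(w * y for w, y in zip(weights, outputs))


# Two hand-written experts and a hand-written gate that prefers expert 0 for
# negative inputs and expert 1 for positive inputs.
experts = [lambda x: 2 * x + 1, lambda x: -x + 5]
gate = lambda x: [-4.0 * x, 4.0 * x]

weighted = MixtureOfExperts(experts, gate)
averaged = MixtureOfExperts(experts, gate, combine="mean")
print(averaged.predict(-2))            # 2.0: the plain mean of -3 and 7
print(round(weighted.predict(-2), 3))  # -3.0: almost all weight on expert 0
```

The contrast between the two outputs shows why the combination method matters: plain averaging blends a good and a bad expert equally, while gate-weighted averaging follows the expert the gate trusts for that input.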

These key components work together to leverage the strengths of different models and make accurate predictions. By combining the outputs of multiple experts, MoE can capture complex relationships and improve the overall accuracy of predictions.

The Gate

The Gating Network, or Gating Mechanism, typically takes the form of a separate neural network that learns how much weight to give each expert for a given input. This allows the system as a whole to adapt dynamically to new inputs and adjust the relative contributions of each expert accordingly. During training, both the experts and the gating mechanism are updated simultaneously to minimize the overall error across the entire dataset.
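The simultaneous update can be illustrated with a tiny, dependency-free example. It is a sketch under our own assumptions: 1-D inputs, two linear experts, a linear gate whose scores pass through a softmax so that it outputs a probability vector, a squared-error loss, and plain gradient descent with hand-derived gradients. Real MoE layers use deep-learning frameworks and automatic differentiation instead. The helper names `forward`, `mse` and `train_jointly` are hypothetical.

```python
# A minimal sketch of training experts and gate together by gradient descent.
# Assumptions for illustration only: 1-D inputs, two linear experts
# f_i(x) = a_i*x + b_i, a linear softmax gate with logits z_i = w_i*x + c_i,
# squared-error loss, and hand-derived gradients (no ML library).
import math


def softmax(zs):
    m = max(zs)
    es = [math.exp(z - m) for z in zs]
    s = sum(es)
    return [e / s for e in es]


def forward(x, experts, gate):
    f = [a * x + b for a, b in experts]        # expert predictions
    g = softmax([w * x + c for w, c in gate])  # gate probabilities
    return sum(gi * fi for gi, fi in zip(g, f)), f, g


def mse(data, experts, gate):
    return sum((forward(x, experts, gate)[0] - y) ** 2 for x, y in data) / len(data)


def train_jointly(data, experts, gate, lr=0.05, steps=500):
    n = len(data)
    for _ in range(steps):
        d_exp = [[0.0, 0.0] for _ in experts]
        d_gate = [[0.0, 0.0] for _ in gate]
        for x, y in data:
            y_hat, f, g = forward(x, experts, gate)
            err = y_hat - y  # dL/dy_hat for L = 0.5 * (y_hat - y)^2
            for i in range(len(experts)):
                # Expert i's gradient is scaled by its gate weight g_i.
                d_exp[i][0] += err * g[i] * x / n
                d_exp[i][1] += err * g[i] / n
                # Gate logit i: dy_hat/dz_i = g_i * (f_i - y_hat).
                dz = err * g[i] * (f[i] - y_hat)
                d_gate[i][0] += dz * x / n
                d_gate[i][1] += dz / n
        # Experts and gate are updated in the same step.
        experts = [(a - lr * da, b - lr * db)
                   for (a, b), (da, db) in zip(experts, d_exp)]
        gate = [(w - lr * dw, c - lr * dc)
                for (w, c), (dw, dc) in zip(gate, d_gate)]
    return experts, gate


# Toy target y = |x|: one line suits x < 0, another suits x >= 0.
data = [(i / 4, abs(i / 4)) for i in range(-8, 9)]
experts0 = [(0.5, 0.0), (-0.5, 0.0)]
gate0 = [(1.0, 0.0), (-1.0, 0.0)]  # asymmetric start so the experts can diverge
before = mse(data, experts0, gate0)
experts, gate = train_jointly(data, experts0, gate0)
after = mse(data, experts, gate)
print(f"MSE before: {before:.4f}, after: {after:.4f}")
```

Because each expert's gradient is multiplied by its gate weight $g_i(x)$, an expert learns mostly from the inputs the gate sends it, which is how specialization emerges when experts and gate are trained together.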

The gating network takes the input and outputs a vector of probabilities, which are then used to compute a weighted sum of the predictions from the expert models.

If you think about it, a Mixture of Experts starts to better reflect how mammals’ brains operate, with distinct areas to process smell, vision, sound, and so on. Our own brain activates certain areas for certain tasks: it is not “always on” at full capacity, but it is working on many tasks all the time. Neurologists have debunked the “10% myth”, a popular but groundless belief that humans use only a small fraction of their brains and could gain magic superpowers if they could awaken the rest. Rather than acting as a single mass, the brain has distinct regions for different kinds of information processing. Decades of research have gone into mapping functions onto areas of the brain, and no function-less areas have been found.

The truth is that some areas are more “activated” when reading, others when preparing food and cooking, and others when we listen to music or are engaged in a deep conversation, but the whole brain is active even during sleep and is continuously exchanging information: regulating, monitoring, sensing, interpreting, reasoning, planning, and acting. Even people with degenerative neurological disorders such as Alzheimer’s and Parkinson’s disease still use more than 10% of their brains.

In debunking the ten percent myth, Knowing Neurons editor Gabrielle-Ann Torre writes that using all of one's brain at once would not be desirable either, which mirrors how an MoE works (whilst several experts may be relevant to a task, many sparse MoE models activate only two experts per token at inference). If the whole brain were used without restraint for every task, it would almost certainly trigger an epileptic seizure. Torre writes that, even at rest, a person likely uses as much of his or her brain as reasonably possible through the default mode network, a widespread brain network that is active and synchronized even in the absence of any cognitive task. Torre says that "large portions of the brain are never truly dormant, as the 10% myth might otherwise suggest."

The gating network acts much like a master of ceremonies, knowing which expert to trust more at each moment: focusing on running is not the same as writing an essay. Likewise, asking a system to translate Catalan is not the same as asking it to write Python code or to explain the concept of Council Tax in the UK.

Advantages of MoE

One key advantage of MoE over other ensemble methods such as bagging or boosting is that it allows greater flexibility and customization of the individual experts. By letting each expert specialize in a particular aspect of the problem space, MoE can achieve higher accuracy and better generalization than would be possible with a single monolithic model that is “good for everything”, be it a 7B, 13B, 32B or 70B model. This flexibility comes with some drawbacks, however, such as increased computational requirements and potential difficulties in optimizing the gating mechanism.

MoE models have been shown to be effective in a variety of applications, such as natural language processing, computer vision, and speech recognition. They are particularly useful in situations where the data is complex and can be divided into distinct subsets, and where different models may be better suited to making predictions for different subsets.

One of the key benefits of MoE models is that they allow for more efficient use of computational resources. By specializing each expert model to a specific cluster, the overall system can be made more efficient by avoiding the need to train a single, monolithic model on the entire dataset. Additionally, MoE models can be more robust to changes in the data distribution, as the gating network can adapt to changes in the data and allocate more resources to the expert models that are best suited to handling the new data.

However, MoE models are considerably more complex to train and deploy than traditional machine learning models, as they require training multiple models and a gating network. MoE models can also be more prone to overfitting if the number of clusters or expert models is not chosen carefully.

A related benefit is that MoE models make more efficient use of model capacity. By dividing the input space into multiple regions and assigning a different expert model to each region, the MoE can use a smaller, more specialized model for each region instead of a single, large, general-purpose model. This can improve both computational efficiency and predictive accuracy.
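A rough parameter count shows where the efficiency comes from. The numbers below are hypothetical and ignore any layers the experts share (such as embeddings or attention): $N = 8$ experts of $P_e = 1\text{B}$ parameters each, with every input routed to $k = 2$ of them.

$$
P_{\text{total}} = N \cdot P_e = 8 \times 1\text{B} = 8\text{B}, \qquad
P_{\text{active}} = k \cdot P_e = 2 \times 1\text{B} = 2\text{B}, \qquad
\frac{P_{\text{active}}}{P_{\text{total}}} = \frac{k}{N} = \frac{2}{8} = 25\%.
$$

A dense model with the same 8B parameters would use all of them for every input, so in this example the per-input expert compute is roughly a quarter of the dense equivalent (plus the small cost of running the gate), while the total capacity stays the same.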

Another benefit of MoE models is that they are highly flexible and can be easily adapted to a wide range of tasks and input domains. By adding or removing expert models, and by adjusting the gating network, the MoE can be easily customized to suit the needs of a particular task or application.

Training and Implementing Mixture of Experts

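Training a Mixture of Experts can be organized in two main steps: training the experts and training the gating network. (In deep MoE layers, the experts and the gate are more commonly trained jointly, end to end.)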

To train the experts, the data is partitioned according to the subtasks or regions of the input space. Each expert is trained on its corresponding subset, optimizing its parameters to make accurate predictions for that specific subtask.

Once the experts are trained, the gating network is trained using the input data and the experts' predictions. The gating network learns to assign weights to each expert's prediction based on the input data, ensuring that the most relevant expert's output is given more importance. The gating network is typically trained using techniques such as backpropagation or reinforcement learning.
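The second stage can be sketched as follows. We assume (our choice, one of several possible) that each training example is labelled with the expert whose prediction is closest to the target, and that the gate is a linear softmax classifier trained by gradient descent on cross-entropy; the reinforcement-learning option mentioned above is not covered. All names, including `best_expert_labels` and `train_gate`, are hypothetical.

```python
# A minimal sketch of the two-stage recipe: the experts are already trained
# and frozen; the gate is then fitted on the inputs and the experts' predictions.
# Assumptions for illustration only: 1-D inputs, each example labelled with the
# expert whose prediction is closest to the target, and a linear softmax gate
# trained by gradient descent on cross-entropy.
import math


def softmax(zs):
    m = max(zs)
    es = [math.exp(z - m) for z in zs]
    s = sum(es)
    return [e / s for e in es]


def best_expert_labels(data, experts):
    """Index of the expert with the smallest error on each training example."""
    return [min(range(len(experts)), key=lambda i: abs(experts[i](x) - y))
            for x, y in data]


def gate_weights(x, gate):
    return softmax([w * x + c for w, c in gate])


def train_gate(data, experts, lr=0.1, steps=300):
    labels = best_expert_labels(data, experts)
    k, n = len(experts), len(data)
    gate = [[0.0, 0.0] for _ in range(k)]  # (w, c) per expert: logit = w*x + c
    for _ in range(steps):
        grads = [[0.0, 0.0] for _ in range(k)]
        for (x, _), label in zip(data, labels):
            p = gate_weights(x, gate)
            for i in range(k):
                # Cross-entropy gradient w.r.t. logit i is p_i - 1[i == label].
                d = p[i] - (1.0 if i == label else 0.0)
                grads[i][0] += d * x / n
                grads[i][1] += d / n
        for i in range(k):
            gate[i][0] -= lr * grads[i][0]
            gate[i][1] -= lr * grads[i][1]
    return gate


# Stage 1 (assumed done): one expert fitted on x < 0, another on x > 0.
experts = [lambda x: -x, lambda x: x]
data = [(i / 4, abs(i / 4)) for i in range(-8, 9) if i != 0]
# Stage 2: fit the gate while the experts stay frozen.
gate = train_gate(data, experts)
print([round(p, 2) for p in gate_weights(-1.5, gate)])  # weight mostly on expert 0
```

Turning the experts' predictions into "which expert was best" labels makes gate training an ordinary classification problem, which is one simple way to train the gate after the experts.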

Implementing a Mixture of Experts involves designing and implementing the experts, the gating network, and the combination method. The experts can be any machine learning model, such as neural networks, decision trees, or support vector machines. The gating network can be implemented using neural networks or other techniques that can assign weights to the experts' predictions. The combination method can be as simple as averaging the outputs or using more sophisticated techniques, depending on the specific application.

It is important to carefully tune the parameters and architecture of the experts and the gating network to achieve the best performance. Additionally, the training process may require a large amount of labeled data and computational resources, depending on the complexity of the problem and the models used.

Traditional versus Sparse MoE

The difference between traditional Mixture of Experts (MoE) models and sparse MoE models lies primarily in their architecture and how they allocate computational resources. Let's break down the key distinctions:

Traditional MoE Models:

1. Dense Expert Utilization: In traditional MoE models, a significant number of experts are typically active for each input. This means that for any given piece of data, multiple experts provide their outputs, which are then combined to produce the final result.

2. Computational Overhead: Because many experts are involved in processing each input, traditional MoE models can be computationally expensive. This can lead to inefficiencies, especially when scaling up the model for large datasets or complex tasks.

3. Uniform Expertise: Often, the experts in traditional MoE models are not highly specialized. They may have overlapping skills or knowledge areas, leading to redundant processing.
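A toy sketch makes the overhead concrete. Under our own assumptions (eight stand-in experts that are simple functions and a hand-written gate), a dense MoE calls every expert for every input, even when the gate puts almost all of its weight on a single one. The names `dense_moe` and `gate_scores` are hypothetical.

```python
# Dense (traditional) MoE: every expert is evaluated for every input, and the
# gate only decides how the outputs are mixed. Hypothetical toy setup.
import math


def softmax(zs):
    m = max(zs)
    es = [math.exp(z - m) for z in zs]
    s = sum(es)
    return [e / s for e in es]


def dense_moe(x, experts, gate_scores):
    outputs = [f(x) for f in experts]  # N expert calls, always
    weights = softmax(gate_scores(x))
    y = sum(w * o for w, o in zip(weights, outputs))
    return y, len(outputs)             # prediction, number of experts evaluated


N = 8
experts = [lambda x, i=i: i * x for i in range(N)]                # stand-in experts
gate_scores = lambda x: [10.0 if i == 3 else 0.0 for i in range(N)]  # favours expert 3

calls = 0
for x in [0.5, 1.0, 2.0]:
    y, n_evaluated = dense_moe(x, experts, gate_scores)
    calls += n_evaluated
print(calls)  # 24: all 8 experts ran for each of the 3 inputs
```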

Sparse MoE Models:

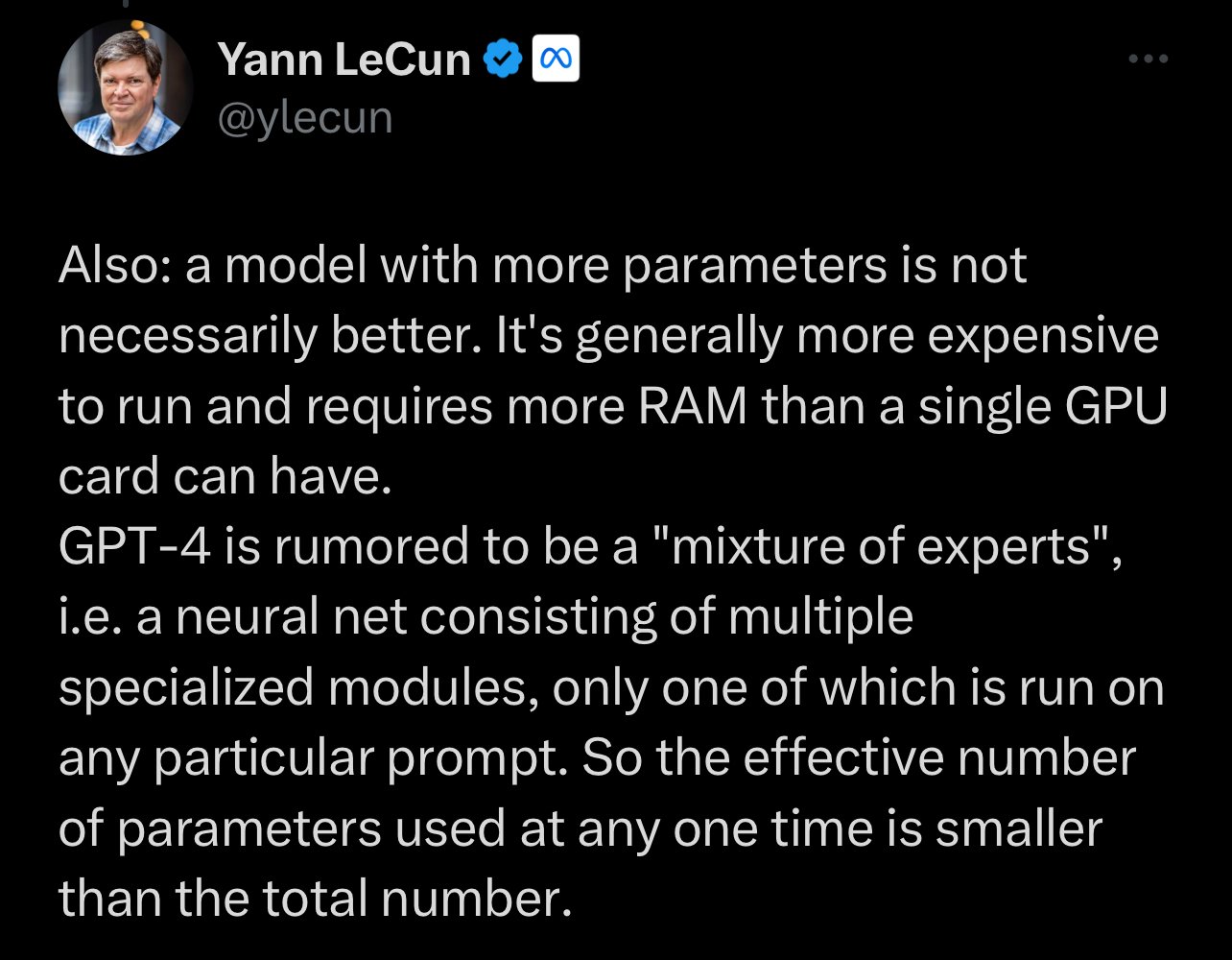

1. Selective Expert Activation: Sparse MoE models are designed to activate only a small subset of experts for each input. The model's gating network intelligently decides which experts are most relevant for the specific task at hand, thus reducing the number of active experts at any given time.

2. Efficiency and Scalability: The sparsity in expert activation makes these models more efficient and scalable. They are better suited for handling large-scale tasks because they reduce the computational load by only utilizing the necessary experts.

3. Specialized Expertise: In sparse MoE models, experts tend to be more specialized, so the model can draw on the most relevant knowledge and skills for each input. In large language models, this specialization usually emerges during training rather than being assigned by hand, and it does not always line up with human-recognizable tasks or domains.

4. Load Balancing and Resource Management: Sparse MoE models typically include mechanisms that balance the load among experts, so that no expert is overloaded while others sit idle, and that manage computational resources more effectively. This is crucial in large-scale applications, where resource optimization is key. Our experience with hosting at Pangeanic also tells us that, at the serving level, a good, reliable load balancer is essential when hundreds or thousands of users need to access your resources at peak times. (We are not in the race for millions of users yet.)
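Selective activation and in-model load balancing can be sketched in a few lines. The routing rule here (a softmax over only the top-k gate scores) is one common variant, and the auxiliary loss follows the form popularised by the Switch Transformer, $N \sum_i f_i P_i$, where $f_i$ is the fraction of inputs whose top choice is expert $i$ and $P_i$ is the average gate probability for expert $i$; during training it is added to the main loss with a small coefficient. The experts, scores and function names are hypothetical toys, not Pangeanic's serving setup, and the sketch covers balancing inside the model only, not request-level load balancing across servers.

```python
# Sparse MoE routing: keep the top-k gate scores, renormalise them, and run
# only those experts. Plus a Switch-Transformer-style auxiliary loss,
# N * sum_i f_i * P_i. Toy, dependency-free sketch.
import math


def softmax(zs):
    m = max(zs)
    es = [math.exp(z - m) for z in zs]
    s = sum(es)
    return [e / s for e in es]


def top_k_route(scores, k):
    """Indices of the k highest scores and their softmax-renormalised weights."""
    top = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]
    return top, softmax([scores[i] for i in top])


def sparse_moe(x, experts, gate_scores, k=2):
    top, weights = top_k_route(gate_scores(x), k)
    # Only the k selected experts are evaluated; the others are skipped.
    y = sum(w * experts[i](x) for i, w in zip(top, weights))
    return y, top


def load_balancing_loss(batch_scores):
    """N * sum_i f_i * P_i over a batch of gate score vectors.

    f_i: fraction of inputs whose top-1 expert is i.
    P_i: mean gate probability assigned to expert i.
    Equals 1.0 when inputs are spread evenly over the experts; concentrating
    inputs on a few experts pushes it higher.
    """
    n_experts = len(batch_scores[0])
    counts = [0] * n_experts
    prob_sums = [0.0] * n_experts
    for scores in batch_scores:
        probs = softmax(scores)
        counts[max(range(n_experts), key=lambda i: scores[i])] += 1
        for i, p in enumerate(probs):
            prob_sums[i] += p
    b = len(batch_scores)
    return n_experts * sum((c / b) * (p / b) for c, p in zip(counts, prob_sums))


experts = [lambda x, i=i: float(i) for i in range(4)]  # stand-in experts
y, chosen = sparse_moe(1.0, experts, lambda x: [0.0, 3.0, 1.0, 2.0], k=2)
print(chosen)  # [1, 3]
print(load_balancing_loss([[1.0, 0.0], [0.0, 1.0]]))  # balanced: about 1.0
print(load_balancing_loss([[2.0, 0.0], [2.0, 0.0]]))  # skewed: larger
```

Compared with a dense MoE, only `k` expert calls happen per input, and the auxiliary loss gives the gate a reason not to send everything to the same few experts.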

In short, while both traditional and sparse MoE models use a collection of experts to process data, sparse MoE models (the latest generation) are more efficient in how they utilize these experts. By selectively activating a limited number of highly specialized experts for each task, sparse MoE models achieve greater computational efficiency and scalability, making them more suitable for complex, large-scale applications.

Challenges and Future of Mixture of Experts

One challenge is the selection and design of experts. It is important to choose experts that complement each other and cover different aspects of the problem. Designing effective experts requires domain knowledge and expertise, as well as careful feature engineering.

Another challenge is the training and optimization of the gating network (gating mechanism). The gating network needs to learn to assign the appropriate weights to each expert's prediction based on the input data. This requires a large amount of labeled data and computational resources, as well as careful tuning of the network architecture and training algorithm.

The future of Mixture of Experts looks very promising, and it is where Pangeanic is focusing its customization efforts. With advances in deep learning and AI, there are opportunities to improve the performance and efficiency of MoE models. Future research may focus on developing more advanced expert models, exploring new combination methods, and finding ways to reduce the computational requirements of training MoE models.

Overall, the Mixture of Experts architecture has the potential to revolutionize the field of deep GenAI systems by leveraging the strengths of multiple models and capturing complex relationships in the data. It is an exciting area of research with promising applications in various domains.